Search engines use web crawling to keep their indexes up to date with the content of other sites. A web crawler, also known as a spider or spiderbot, is an Internet bot that systematically browses the World Wide Web, typically for the purpose of Web indexing.

What Does a Web Crawler Do?

Web crawlers copy pages for processing by the search engine, which indexes the downloaded pages so users can search more efficiently. Web crawlers can usually discover most of your site's pages if they are correctly linked. However, a sitemap file can improve how your website's pages are crawled.

Web crawlers crawl the web by following links from one page to another, so they can miss pages in some situations: for example, if your site is new and has few external links pointing to it, or if it relies heavily on rich media content. Similarly, if your site is large and has an extensive archive of content pages that are isolated or poorly linked to each other, web crawlers might overlook some of your new or recently updated pages. As a result, search engines might not discover your pages if no other sites link to them. In any of these cases, a sitemap file can give the web crawler additional information so that search engines do not overlook pages on your website.
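
To make the link-following behavior concrete, here is a minimal Python sketch of a breadth-first crawler over a small, hypothetical in-memory site. The LINKS graph, the page paths (which stand in for full URLs), and the crawl helper are invented for illustration; it assumes a crawler that only discovers pages by following links from its seed URLs, which is a large simplification of a real search engine crawler.

```python
from collections import deque

# Hypothetical site: each page maps to the pages it links to.
# "/archive/2015/old-post" is isolated: no other page links to it.
LINKS = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/new-post", "/"],
    "/blog/new-post": ["/blog"],
    "/archive/2015/old-post": [],
}


def crawl(links, seeds):
    """Breadth-first crawl: start from the seed URLs and follow links."""
    discovered = set(seeds)
    queue = deque(discovered)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in discovered:
                discovered.add(target)
                queue.append(target)
    return discovered


# Following links from the home page alone misses the isolated page.
print(sorted(crawl(LINKS, ["/"])))
# ['/', '/about', '/blog', '/blog/new-post']

# Adding the sitemap's URLs as extra seeds lets the crawler reach it.
sitemap_urls = list(LINKS)
print(sorted(crawl(LINKS, ["/"] + sitemap_urls)))
# ['/', '/about', '/archive/2015/old-post', '/blog', '/blog/new-post']
```

In this toy model, seeding the crawl with the sitemap's URLs is what makes the isolated page discoverable.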

What Is a Sitemap File and How Is It Structured?

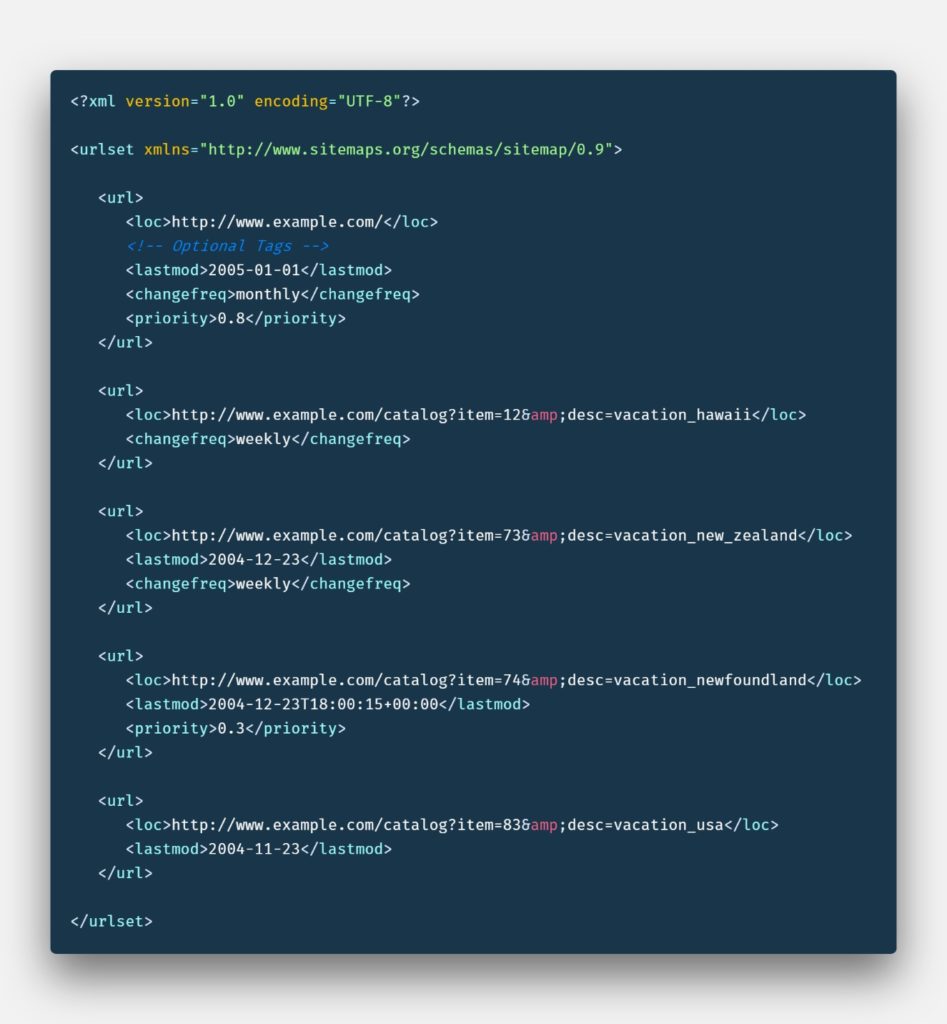

A sitemap is an XML file that lists the URLs for a site along with additional metadata about each URL, such as when the page was last updated, how often it usually changes, and how important it is (priority) relative to other URLs on the site. You can have different sitemap files that provide information about the pages, videos, images, and other files on your website, and the relationships between them, so that search engines can crawl your website more intelligently. The sitemap tells the crawler which files on your website are important and also provides valuable information about these files. Web crawlers that support sitemaps pick up all the URLs in the sitemap and learn about those URLs from the associated metadata.
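
As an illustration of how a crawler might pick up those URLs and their metadata, the sketch below parses a small, hypothetical sitemap with Python's standard xml.etree.ElementTree module. The namespace URI is the one defined by the sitemap protocol; the sample URLs and values are made up, and the helper name read_sitemap is only illustrative.

```python
import xml.etree.ElementTree as ET

# Namespace URI defined by the sitemap protocol (schema 0.9).
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content, used only for illustration.
SITEMAP_XML = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.mydomain.com/</loc>
    <lastmod>2024-01-15</lastmod>
    <changefreq>weekly</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://www.mydomain.com/about</loc>
  </url>
</urlset>"""


def read_sitemap(xml_bytes):
    """Return one dict per <url> entry: its <loc> plus any optional metadata."""
    root = ET.fromstring(xml_bytes)
    entries = []
    for url in root.findall("sm:url", NS):
        entries.append({
            "loc": url.findtext("sm:loc", namespaces=NS).strip(),
            # Optional tags: findtext returns None when the tag is absent.
            "lastmod": url.findtext("sm:lastmod", namespaces=NS),
            "changefreq": url.findtext("sm:changefreq", namespaces=NS),
            # The protocol defines 0.5 as the default priority.
            "priority": float(url.findtext("sm:priority", "0.5", NS)),
        })
    return entries


for entry in read_sitemap(SITEMAP_XML):
    print(entry["loc"], entry["lastmod"], entry["priority"])
```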

The sitemap protocol format consists of XML tags. A sitemap must include:

- An opening <urlset> tag that specifies the namespace (protocol standard).

- An opening <url> tag entry for each URL, as a parent XML tag.

- A <loc> tag as a child entry of each <url> parent tag, containing the URL of the page.

- A closing </url> tag for each entry.

- A closing </urlset> tag.

The following is an illustration of a basic sitemap file with some optional tags. For more information about the XML tag definitions in the sitemap protocol, visit https://www.sitemaps.org/protocol.html.
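
A minimal Python sketch that produces the required structure listed above (plus the optional lastmod, changefreq, and priority tags) using the standard xml.etree.ElementTree module. The helper name build_sitemap and the sample pages are hypothetical; this is one way to generate the file, not a complete sitemap generator.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
OPTIONAL_TAGS = ("lastmod", "changefreq", "priority")


def build_sitemap(pages):
    """Build a sitemap from (loc, optional_tags) pairs; return UTF-8 bytes."""
    # Opening <urlset> tag carrying the protocol namespace.
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, optional in pages:
        url = ET.SubElement(urlset, "url")      # one <url> parent per page
        ET.SubElement(url, "loc").text = loc    # required <loc> child
        for tag in OPTIONAL_TAGS:
            if tag in optional:                 # emit optional tags only if given
                ET.SubElement(url, tag).text = optional[tag]
    # ElementTree writes every closing tag and escapes characters such as "&".
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


xml_bytes = build_sitemap([
    ("https://www.mydomain.com/",
     {"lastmod": "2024-01-15", "changefreq": "weekly", "priority": "1.0"}),
    ("https://www.mydomain.com/catalog?item=12&desc=vacation_hawaii", {}),
])
print(xml_bytes.decode("utf-8"))
```

Letting the XML library serialize the file, rather than concatenating strings by hand, means the closing tags and the escaping of "&" in URLs are handled automatically.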

All URLs listed in your sitemap file must reside on the same host as the sitemap file and within the directory that contains it. For instance, if the sitemap file is located at https://www.mydomain.com/sitemap.xml, it can't include URLs from https://subdomain.mydomain.com. If the sitemap file is located at https://www.mydomain.com/myfolder/sitemap.xml, it can't include URLs from elsewhere on https://www.mydomain.com, outside /myfolder/. The sitemap file must be UTF-8 encoded. It is strongly recommended to place the sitemap in the root directory of your web server; that is, put it at https://www.mydomain.com/sitemap.xml.
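
The location rule can be expressed as a simple check. This Python sketch is a simplified reading of the rule: it assumes a URL is in scope when it has the same scheme and host (including any port) as the sitemap and its path starts with the directory that contains the sitemap file. The function name in_sitemap_scope is hypothetical.

```python
from urllib.parse import urlsplit


def in_sitemap_scope(sitemap_url, page_url):
    """Simplified check: may page_url be listed in the sitemap at sitemap_url?

    Assumes a page is in scope when it uses the same scheme and host
    (including any port) as the sitemap, and its path starts with the
    directory that contains the sitemap file.
    """
    sitemap, page = urlsplit(sitemap_url), urlsplit(page_url)
    sitemap_dir = sitemap.path.rsplit("/", 1)[0] + "/"
    return (
        sitemap.scheme == page.scheme
        and sitemap.netloc.lower() == page.netloc.lower()
        and (page.path or "/").startswith(sitemap_dir)
    )


print(in_sitemap_scope("https://www.mydomain.com/sitemap.xml",
                       "https://subdomain.mydomain.com/page"))   # False
print(in_sitemap_scope("https://www.mydomain.com/myfolder/sitemap.xml",
                       "https://www.mydomain.com/about"))        # False
print(in_sitemap_scope("https://www.mydomain.com/myfolder/sitemap.xml",
                       "https://www.mydomain.com/myfolder/a"))   # True
```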

Each sitemap should be no larger than 50MB (uncompressed) and can contain a maximum of 50,000 URLs. These limits help ensure that your web server does not get bogged down serving huge files. If your site contains more than 50,000 URLs or your sitemap is bigger than 50MB, you must create multiple sitemap files and list them in a sitemap index file. If your site is small but you plan on growing beyond 50,000 URLs or a file size of 50MB, it is recommended to start using sitemap index files.
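
The sketch below, assuming a hypothetical site with 120,000 pages and invented child file names (sitemap-1.xml and so on), splits the URL list into groups of at most 50,000 and builds a sitemap index with one entry per child sitemap. The helper names are illustrative. It only enforces the URL-count limit; a real generator would also check each file's size against the 50MB limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split the URL list into groups of at most `size` URLs."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_locs):
    """Build a <sitemapindex> with one <sitemap><loc> entry per child file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locs:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = loc
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


# Hypothetical site with 120,000 pages, which needs three child sitemaps.
urls = [f"https://www.mydomain.com/page/{n}" for n in range(120_000)]
chunks = chunk_urls(urls)
child_locs = [
    f"https://www.mydomain.com/sitemap-{i + 1}.xml" for i in range(len(chunks))
]
index_xml = build_sitemap_index(child_locs)
print(len(chunks), [len(c) for c in chunks])  # 3 [50000, 50000, 20000]
```

Each chunk would then be written out as its own sitemap file at the matching child location.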

In the <loc> tag, you need to include the protocol (for instance, HTTP or HTTPS) of your page URL. You also need to include a trailing slash in your URL if your web server requires one. For example, https://www.mydomain.com/ is a valid URL for a sitemap, whereas www.mydomain.com is not. It is important to list only one version of each page's URL in your sitemaps. This means listing only HTTPS URLs or only HTTP URLs, but not both. Including multiple versions of a page's URL may result in incomplete crawling of your site by the search engine.
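
A small Python sketch of these rules: it drops entries that have no protocol, treats an empty path as "/", and collapses HTTP and HTTPS variants of the same page into a single URL. The helper name canonical_locs is hypothetical, and it assumes every page on the site is served over HTTPS; a site served over HTTP would pass scheme="http" instead.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_locs(raw_urls, scheme="https"):
    """Return one absolute URL per page, all using the same scheme.

    Assumption: every page is served over `scheme`, so http:// and https://
    variants of the same page collapse into a single entry.
    """
    seen, result = set(), []
    for raw in raw_urls:
        parts = urlsplit(raw)
        if not parts.scheme or not parts.netloc:
            continue  # e.g. "www.mydomain.com": no protocol, not a valid <loc>
        url = urlunsplit((scheme, parts.netloc.lower(), parts.path or "/",
                          parts.query, parts.fragment))
        if url not in seen:
            seen.add(url)
            result.append(url)
    return result


print(canonical_locs([
    "http://www.mydomain.com/about",
    "https://www.mydomain.com/about",
    "www.mydomain.com",
    "https://WWW.mydomain.com",
]))
# ['https://www.mydomain.com/about', 'https://www.mydomain.com/']
```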

Two common ways to make the sitemap file available to search engines are to submit it via the search engine's submission tool, or to insert a line such as Sitemap: https://www.mydomain.com/sitemap.xml anywhere in your website's robots.txt file to specify the path to your sitemap file. A robots.txt file tells search engines which parts of your website you do not want them to crawl, and the sitemap tells them where you want them to go.
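
Python's standard library can read the Sitemap line from robots.txt: urllib.robotparser.RobotFileParser provides a site_maps() method (Python 3.8 and later). The robots.txt content below is hypothetical, and the sketch parses it from a string rather than fetching it over the network.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for www.mydomain.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://www.mydomain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap: URLs, or None if the file lists none.
print(parser.site_maps())  # ['https://www.mydomain.com/sitemap.xml']

# The Disallow rule controls crawling, independently of the sitemap.
print(parser.can_fetch("*", "https://www.mydomain.com/private/report"))  # False
print(parser.can_fetch("*", "https://www.mydomain.com/blog/"))  # True
```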

Why Is a Sitemap File Important?

There is no downside to having a sitemap file, and having one can improve your SEO, so it's highly recommended to have one for your website. Sitemaps are important for SEO because they make it easier for search engines to find your site's pages. This matters because a search engine ranks web PAGES, not just websites. You can think of a sitemap like a blueprint for your house, where each web page is a room, making it easy for the search engine to quickly find all the rooms within your house.

There are a variety of SEO tips and tricks that help optimize your website, and one of them is the use of sitemap files. The importance of sitemap files is sometimes greatly underestimated. Again, a sitemap is a literal map of your website. It makes navigating your website easier, and keeping your sitemap file up to date benefits not only you but search engines as well. Sitemap files are an essential way for a site to communicate with a search engine.

How Will a Website Benefit from a Sitemap File?

A sitemap file has many benefits. Not only does it provide a map for navigating your website, but it also gives your site better visibility to search engines. A sitemap file offers the opportunity to notify search engines of changes to your website's pages as soon as they happen. You cannot expect search engines to rush to account for the changes on your pages, but they will likely pick up your edits faster than they would if your website did not have a sitemap file.
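
One way a sitemap communicates a change is the optional lastmod tag, whose value uses the W3C Datetime format. A minimal sketch, assuming you have the page's last-modified time as a POSIX timestamp (for example from your CMS or from the file's modification time); the helper name lastmod_value is hypothetical.

```python
from datetime import datetime, timezone


def lastmod_value(modified_ts):
    """Format a POSIX timestamp as a W3C Datetime string (UTC) for <lastmod>."""
    moment = datetime.fromtimestamp(modified_ts, tz=timezone.utc)
    return moment.isoformat(timespec="seconds")


# Hypothetical: the page was last edited at this timestamp.
print(f"<lastmod>{lastmod_value(1705314600)}</lastmod>")
# <lastmod>2024-01-15T10:30:00+00:00</lastmod>
```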

When you have a sitemap file and submit it to search engines, you rely less on external links to lead search engines, and the visitors they bring, to your website.

A sitemap file can even help compensate for poor internal linking, for example when there are accidentally broken links or orphan pages that cannot be reached through links. Note, however, that relying on a sitemap instead of fixing these errors is not a wise idea. Also remember that a sitemap file doesn't guarantee that all the URLs in your sitemap will be crawled and indexed, as search engines rely on complex algorithms to schedule crawling. However, in most cases, your website will benefit from having a sitemap file, and you will never be penalized for having one.
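
Because the sitemap lists the pages you want crawled, it can also serve as a reference when auditing your internal links. The Python sketch below is a simplified audit under stated assumptions: the link graph and paths are hypothetical (paths stand in for full URLs), any link target missing from the sitemap is treated as broken, and the home page is never reported as an orphan. The helper name audit_internal_links is illustrative.

```python
def audit_internal_links(sitemap_urls, internal_links, home="/"):
    """Compare a sitemap's URLs with the site's internal link graph.

    Returns (orphans, broken):
      orphans: pages in the sitemap that no other page links to
      broken:  (source, target) links whose target is not in the sitemap,
               used here as a stand-in for "page does not exist"
    """
    known = set(sitemap_urls)
    linked_to = set()
    broken = []
    for source, targets in internal_links.items():
        for target in targets:
            if target == source:
                continue  # a self-link does not make a page reachable
            linked_to.add(target)
            if target not in known:
                broken.append((source, target))
    orphans = [u for u in sitemap_urls if u != home and u not in linked_to]
    return orphans, broken


# Hypothetical site: "/blog/old-post" has no inbound links, and "/blog"
# links to "/blog/new-post", which is not a known page.
sitemap_urls = ["/", "/about", "/blog", "/blog/old-post"]
links = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/new-post"],
}
orphans, broken = audit_internal_links(sitemap_urls, links)
print(orphans)  # ['/blog/old-post']
print(broken)   # [('/blog', '/blog/new-post')]
```

Fixing the reported links directly, rather than relying on the sitemap alone, matches the advice above.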

Authorship: Arturo S.