Every SEO consultant comes across the search engine optimization nightmare of having to deal with a rogue developer at some point in their career.

Below is an outline of what constitutes a rogue developer and the impact one can have on your client’s search engine optimization campaign and its efforts to become a viable, PROFITABLE online entity.

Here at That Company, we specialize not only in providing white label PPC services but also in providing search engine optimization services directly to clients, and we run a very healthy local SEO agency.

As a white label services provider, we act as team members of our white label partners’ companies. We can work directly with our white label partner’s clients under the partner’s brand name, or we can work for the white label partner as a consultant, in which case the partner passes along our recommendations for implementation by them or by their client.

Recently, one of our very strong white label search engine optimization partners brought us a new client that we would work with directly under the white label partner’s brand name. It became evident during our initial onboarding call with the end-user client that we were facing the search engine optimization nightmare of a rogue developer.

The contract was executed on April 4, 2021, and we have yet to see most of our recommendations implemented on the client’s behalf.

In preparing for this initial onboarding call, I did an initial review of all of the client’s documents to formulate a strategic plan.

One of those documents was the result of a site audit tool crawl, which revealed the following:

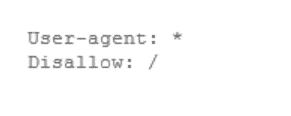

- Robots.txt Blocking Crawlers – 1 error

- Sitemap.xml not Found – 1 error

- Missing Sitemap.xml Reference – 1 error
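For readers who want to check the last item themselves, here is a minimal sketch using Python’s standard `urllib.robotparser` module. It assumes you already have the text of a robots.txt file; the function name `sitemap_references` and the `example.com` URL are illustrative assumptions, not anything taken from the client’s site or from our audit tool.

```python
from urllib.robotparser import RobotFileParser


def sitemap_references(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in a robots.txt body (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap directive is present (Python 3.8+).
    return parser.site_maps() or []


# Hypothetical robots.txt bodies used only for illustration.
WITHOUT_REFERENCE = "User-agent: *\nDisallow:\n"
WITH_REFERENCE = WITHOUT_REFERENCE + "Sitemap: https://example.com/sitemap.xml\n"

print(sitemap_references(WITHOUT_REFERENCE))  # []
print(sitemap_references(WITH_REFERENCE))     # ['https://example.com/sitemap.xml']
```

An empty result corresponds to what an audit tool would report as a missing sitemap reference.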

From first appearances, it looked like I was going to be able to start with a standard on-page optimization strategy.

And, that is what I prepared to present to the client on my initial call.

We were to find out later why there were so few site audit errors in our reporting data, but we will cover that below.

Search Engine Optimization Campaign Management On-Boarding Procedures

During the search engine optimization client onboarding call, we seek to obtain several items that are mission-critical to our success for the client.

We ask to be granted access to their website administration and their website server files.

After about a month, the developer still had not granted access, and the client, as we found out, had never had access to their own website.

Several conversations later, we found out that the client had been with this developer and so-called SEO expert for 15 years.

There was not even a simple Google Analytics setup for the site in all that time.

More evidence of a rogue developer.

When we took on the account, the client had 349 keywords ranking in the top 100 Google search results, only 1 keyword in position 1 (their brand name), and only 11 keywords on page 1.

Those are rather unhealthy results for a business trying to succeed online in a very limited industry.

It became known that the client was trying to divorce themselves from this developer and had even sought out legal advice. That advice was “do this very gently”.

Even the client’s legal advisor knew that this developer had the client by the snardleys.

What About That Robots.txt Block Mentioned Above?

Well, we only saw three errors generated by our site audit tool when typically there are hundreds, if not thousands, ranging from:

- missing meta descriptions

- missing page titles

- duplicate meta descriptions

- duplicate page titles

- long page titles

- short page titles

- long meta descriptions

- short meta descriptions

- missing h1 tags

- multiple h1 tags

- redirected internal URLs

- broken external links

- broken internal links

- missing canonical tags

- and, many more…

But none of these errors were reported.
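To give a sense of how an audit tool arrives at findings like the ones listed above, here is a simplified sketch built on Python’s standard `html.parser`. It is an illustration under stated assumptions, not how our site audit tool actually works: the class `PageAudit`, the function `audit_page`, and the four checks chosen are hypothetical, and a real tool checks far more (lengths, duplicates across pages, link status, and so on).

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collects a few on-page signals from a single HTML document."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_meta_description = False
        self.has_canonical = False
        self.h1_count = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            # Simplification: an empty <title></title> still counts as present.
            self.has_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            # Only count the description if it has non-empty content.
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.has_canonical = True


def audit_page(html: str) -> list[str]:
    """Return a list of issue labels for one page (empty list if none found)."""
    parser = PageAudit()
    parser.feed(html)
    issues = []
    if not parser.has_title:
        issues.append("missing page title")
    if not parser.has_meta_description:
        issues.append("missing meta description")
    if parser.h1_count == 0:
        issues.append("missing h1 tag")
    elif parser.h1_count > 1:
        issues.append("multiple h1 tags")
    if not parser.has_canonical:
        issues.append("missing canonical tag")
    return issues


# Hypothetical page with no title, no description, two h1 tags, no canonical.
sample = "<html><head></head><body><h1>A</h1><h1>B</h1></body></html>"
print(audit_page(sample))
```

The point of the sketch is that these checks only run on pages the crawler is allowed to fetch. If the crawl is blocked, there is nothing to check, and the report comes back looking clean.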

A visual review of the site page by page indicated that this site could not be that perfect.

So, we revisited the block in the robots.txt file and sure enough there it was.

The developer was blocking Google and our site audit tools so that no pages were being crawled. The only exceptions were random links the site had picked up over time, which allowed Google to discover the linked-to page but no other; that is how they had any pages indexed at all.
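A block like this can be spotted quickly without a full audit. The sketch below uses Python’s standard `urllib.robotparser` to test which user agents a robots.txt body shuts out of a given URL. The client’s actual robots.txt is not reproduced here, so the sample rules, the function name `blocked_agents`, the `AuditBot` user agent, and the `example.com` URL are all assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_agents(robots_txt: str, url: str, agents: list[str]) -> list[str]:
    """Return the user agents that the given robots.txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


# Hypothetical site-wide block: every crawler is disallowed from every path.
SITE_WIDE_BLOCK = "User-agent: *\nDisallow: /\n"

# "AuditBot" stands in for a site audit tool's crawler; it is not a real agent name.
print(blocked_agents(
    SITE_WIDE_BLOCK,
    "https://example.com/products",
    ["Googlebot", "AuditBot"],
))  # ['Googlebot', 'AuditBot']
```

If the list that comes back includes Googlebot or your audit tool’s crawler for ordinary content pages, a near-empty audit report should be treated as a warning sign rather than good news.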

If this isn’t rogue web development, we do not know what is, and we had recognized the signs from the very beginning.

Once we got the web developer to remove the crawl block, the whole search engine optimization landscape changed drastically.

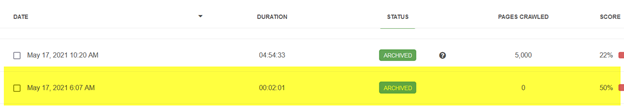

We had crawled the site with our base site audit tool early in the day on May 17, 2021, and still had no pages crawled and a site score of 50% as seen in the image below.

Later that same day, the crawl block was lifted. The next crawl reached the crawl limit of 5,000 pages, and the site score dropped to 22%.

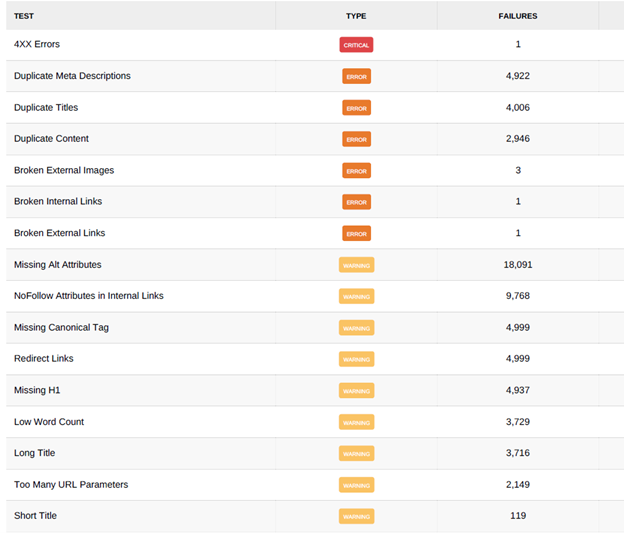

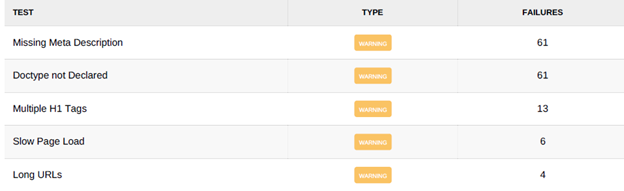

Please keep in mind that our base site audit crawl tool’s limit is 5,000 pages per crawl. Below are several screenshots of the results after the crawl block was lifted.

Screenshot 1:

Screenshot 2:

By all measures, this site was a search engine optimization nightmare, all because of a rogue developer.

Many of the errors reported were due to duplicated pages or pages that were missing altogether. Links in the website’s footer and top menus led to nonexistent pages.

Keeping in mind that our base site audit crawl tool is limited to 5,000 pages per crawl, you will notice that several of the issues reported above reach that limit.

Specifically:

- Duplicate Meta Descriptions

- Missing Canonical Tags

- Redirected Links

When reviewing the data, we found, for example, that the redirected links were coming from a single footer link or a single top menu link.
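This is why a count of 5,000 issues can boil down to one fix. A minimal sketch of the reasoning, assuming the audit export can be reduced to pairs of (page URL, link target) for each redirected link: counting by link target shows when one template-level link accounts for nearly every row. The function name `top_redirect_sources`, the URLs, and the row format are hypothetical and not the export format of any particular tool.

```python
from collections import Counter


def top_redirect_sources(rows, limit=3):
    """Count flagged redirected links by target; rows are (page_url, link_href) pairs."""
    counts = Counter(href for _page, href in rows)
    return counts.most_common(limit)


# Hypothetical export: the same footer link appears on every one of 5,000 pages,
# plus a single unrelated redirected link on one page.
rows = [(f"https://example.com/page-{i}", "/old-contact") for i in range(5000)]
rows.append(("https://example.com/page-1", "/old-blog-post"))

print(top_redirect_sources(rows))  # [('/old-contact', 5000), ('/old-blog-post', 1)]
```

Correcting that one footer or menu link in the site template clears the issue on every page that shares the template.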

We provided instructions for the developer to correct each issue, which typically took three to four emails with the client, who then passed the recommendation along to the developer.

Because of the limit of 5,000 pages per crawl, every time the web developer implemented a recommendation, the next crawl revealed another 5,000 issues related to the original one. This pattern repeated itself time after time for each issue.

Since early June, no recommendations have been implemented and the client continues to struggle with their SEO campaign.

This ultimately resulted in the client asking us as their SEO consultants if they should just start over.

Our answer was a hearty YES!

We reviewed with the client how many recommendations we had made over the last several months, that nothing had been implemented, and that the client’s website was going nowhere until we could get control over the website’s destiny.

This resulted in our white label partner receiving a rewarding contract for re-building the website and providing complete access to the client and our white label SEO team.

The final takeaway for all website owners is to OWN YOUR OWN WEBSITE!

Two of the first questions that you need to ask when seeking a web developer are:

- Will I have admin access to my website?

- Will I have admin access to my website’s server files?

If the answer to either of these is no, then you need to walk away and avoid the search engine optimization nightmare of a rogue web developer ruining your search engine optimization campaign and, ultimately, your online business.