In a previous blog post, we discussed the benefits of the sitemap.xml file for our website. In this post, we are going to discuss the importance of the /robots.txt file on our website.

What Is /robots.txt?

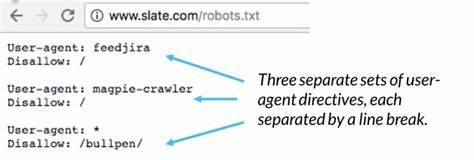

The /robots.txt file is a plain text file located in the root directory of our website's web server. It is important because it gives web robots instructions about which content on our site they may crawl. Web robots, also called crawlers or spiders, are programs that search engines use to crawl and index the content of websites. The rules for writing these instructions are known as the Robots Exclusion Protocol.

The /robots.txt file is public: anyone can open it by typing a URL like https://www.mysite.com/robots.txt and see its content, including the locations you don't want web robots to access. For that reason, the /robots.txt file should not be used to hide sensitive information on your website. It only asks well-behaved robots to stay away; it does not stop anyone from visiting those URLs.
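Because the file always sits at the root of the host, a crawler can work out which /robots.txt governs any page by dropping the page's path, query, and fragment. A minimal Python sketch using only the standard library's `urllib.parse`; `robots_url` is a hypothetical helper name, not part of any tool:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the /robots.txt URL that governs page_url.

    robots.txt always lives at the root of the host, so the page's
    path, query string, and fragment are discarded. The scheme and
    host (including any port) are kept.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.mysite.com/blog/post.html?id=3#top"))
# https://www.mysite.com/robots.txt
```

Each host has its own file, so a subdomain such as shop.mysite.com is governed by https://shop.mysite.com/robots.txt, not by the file on www.mysite.com.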

Robots.txt File Syntax and Content

The instructions in the /robots.txt file can include the location of our sitemap, which directories we do and don't want web robots to access, and which pages we do and don't want them to access. A simple example of /robots.txt instructions is:

```
User-agent: *
Disallow: /
```

The “User-agent: *” line means that the instructions in the file apply to all robots. The “Disallow: /” line tells robots not to crawl any page on the site.
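One way to check what a rule set means is Python's standard `urllib.robotparser` module, which implements the original exclusion standard. A minimal sketch for the "block everything" file above, using Googlebot as the user-agent string and made-up URLs on www.mysite.com. The directives are passed as consecutive lines because this parser, like the original standard, treats a blank line as the end of a record:

```python
from urllib.robotparser import RobotFileParser

# The "block everything" file, with no blank line inside the group.
rules = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

for url in ("https://www.mysite.com/", "https://www.mysite.com/blog/post.html"):
    # "Disallow: /" matches every path, so every URL is refused.
    print(url, parser.can_fetch("Googlebot", url))  # ... False
```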

Other common instructions in /robots.txt include:

- Allow full access to the website but block a folder or page:

```
User-agent: *
Disallow: /folder/
Disallow: /page.html
```

- Allow full access to the website but block a file:

```
User-agent: *
Disallow: /file-name.pdf
```

- Allow full access to the website for all robots but block one specific robot (here, Googlebot):

```
User-agent: *
Disallow:

User-agent: Googlebot
Disallow: /
```
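The three patterns above can be checked the same way. This sketch uses Python's `urllib.robotparser`; `parser_for`, the user-agent `MyBot`, and the URLs are illustrative names, not part of the original examples. The single blank line in the last file is intentional: it separates the group for all robots from the Googlebot group.

```python
from urllib.robotparser import RobotFileParser


def parser_for(robots_txt: str) -> RobotFileParser:
    """Build a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Example 1: block one folder and one page for every robot.
folder_and_page = parser_for("""\
User-agent: *
Disallow: /folder/
Disallow: /page.html
""")

# Example 2: block a single file for every robot.
one_file = parser_for("""\
User-agent: *
Disallow: /file-name.pdf
""")

# Example 3: allow every robot except Googlebot.
# The blank line ends the "*" group before the Googlebot group starts.
all_but_googlebot = parser_for("""\
User-agent: *
Disallow:

User-agent: Googlebot
Disallow: /
""")

site = "https://www.mysite.com"
print(folder_and_page.can_fetch("MyBot", site + "/folder/report.html"))  # False
print(folder_and_page.can_fetch("MyBot", site + "/about.html"))          # True
print(one_file.can_fetch("MyBot", site + "/file-name.pdf"))              # False
print(all_but_googlebot.can_fetch("Googlebot", site + "/about.html"))    # False
print(all_but_googlebot.can_fetch("Bingbot", site + "/about.html"))      # True
```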

For a list of known web robots, visit https://www.robotstxt.org/db.html.

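We need a separate “Disallow” line for every URL prefix we want to exclude. The original robots exclusion standard does not support globbing or regular expressions in either the User-agent or Disallow lines; the ‘*’ in the User-agent field is a special value meaning “any robot.” Modern crawlers such as Googlebot do understand ‘*’ and ‘$’ wildcards inside paths, but a trailing ‘*’ as in /folder3/* adds nothing for them, because every rule already matches by prefix.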

Correct:

```
User-agent: *
Disallow: /file-name.pdf
Disallow: /folder1/
Disallow: /folder2/
```

Error:

```
User-agent: *
Disallow: /file-name.pdf
Disallow: /folder1/ /folder2/
Disallow: /folder3/*
```
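The difference between the correct and incorrect files shows up clearly in Python's `urllib.robotparser`, which follows the original standard: it reads “/folder1/ /folder2/” as one literal path and gives ‘*’ no special meaning inside a path. The helper name `allowed`, the user-agent `MyBot`, and the URLs are illustrative:

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, user_agent: str = "MyBot") -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


correct = """\
User-agent: *
Disallow: /file-name.pdf
Disallow: /folder1/
Disallow: /folder2/
"""

incorrect = """\
User-agent: *
Disallow: /file-name.pdf
Disallow: /folder1/ /folder2/
Disallow: /folder3/*
"""

site = "https://www.mysite.com"
print(allowed(correct, site + "/folder2/page.html"))    # False: /folder2/ has its own line
print(allowed(incorrect, site + "/folder2/page.html"))  # True: the combined line blocks neither folder
print(allowed(incorrect, site + "/folder3/page.html"))  # True with this parser; Googlebot would block it
```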

Why Is Robots.txt Important?

We should understand the importance of the /robots.txt file because using it incorrectly can hurt a website's ranking.

The /robots.txt file contains instructions that control how search engine robots crawl and interact with the site's pages. This file, as well as the bots it interacts with, is a fundamental part of how search engines work.

The /robots.txt file is the first thing search engine robots look for when visiting a website, because they need to know whether they are allowed to access the site's content and which folders, pages, and files they may crawl.

Some of the reasons to have a /robots.txt file on our website can include:

- We have content we want to block from search engines.

- There are paid links or advertisements that need special instructions for different web robots.

- We want to limit which parts of our site reputable robots (the ones that follow these instructions) can access.

- We are developing a live site, but we do not want search engines to index it yet.

- Some or all of the above is true, but we do not have full access to our web server and how it is configured.

Other methods can handle these cases, but the /robots.txt file is a simple, central place to take care of them. If our website has no /robots.txt file, search engine robots will assume they have full access to the site.
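From the crawler's side, the check described in this section can be sketched as a small Python function. It assumes the crawler has already downloaded /robots.txt (or found that it does not exist) and passes the text in; `may_crawl`, `MyBot`, and the URLs are hypothetical. When there is no file, nothing is excluded, matching the last sentence above.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def may_crawl(robots_txt: Optional[str], user_agent: str, url: str) -> bool:
    """Return True if user_agent may crawl url.

    robots_txt is the text of the site's /robots.txt, or None when the
    site has no such file (for example, the server answered 404).
    """
    if robots_txt is None:
        # No file means nothing is excluded: the whole site is open.
        return True
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


rules = "User-agent: *\nDisallow: /private/\n"
print(may_crawl(None, "MyBot", "https://www.mysite.com/private/a.html"))   # True
print(may_crawl(rules, "MyBot", "https://www.mysite.com/private/a.html"))  # False
print(may_crawl(rules, "MyBot", "https://www.mysite.com/blog.html"))       # True
```

`RobotFileParser` also offers `set_url()` and `read()` to download the file itself; this sketch keeps fetching separate so it runs offline.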

What Do the Instruction Keywords Mean?

“User-agent:” -> Specifies which robot the instructions that follow apply to. A statement like “User-agent: *” means the directives apply to all robots, while “User-agent: Googlebot” means they apply only to Googlebot.

“Disallow:” -> Tells web robots which folders (or pages and files) they should not crawl. For example, if you do not want search engines to crawl the images on your site, you can place those images in one folder and exclude it with “Disallow: /images/”.

“Allow:” -> Tells a robot that it may crawl a file inside a folder that other instructions have disallowed. “Allow” was not part of the original standard, but major search engines support it. For example:

```
User-agent: *
Disallow: /images/
Allow: /images/myphoto.jpg
```
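Parsers do not all resolve Allow and Disallow the same way. Python's `urllib.robotparser`, for example, applies the first rule in the group that matches, which would leave /images/myphoto.jpg blocked for the file above because the Disallow line comes first. Google and the current standard (RFC 9309) instead use the most specific rule: the longest matching path wins, and Allow wins a tie. A minimal sketch of that longest-match rule, ignoring wildcards and user-agent groups; `is_allowed` and its list-of-pairs input are our own assumptions:

```python
def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide whether path may be crawled under one group's rules.

    rules holds (directive, path) pairs such as ("Disallow", "/images/").
    The longest matching path wins; if an Allow and a Disallow match with
    the same length, Allow wins. If nothing matches, crawling is allowed.
    Wildcards (* and $) are not handled in this sketch.
    """
    best_length = -1
    allowed = True
    for directive, rule_path in rules:
        if not rule_path:
            # An empty value (e.g. "Disallow:") matches nothing.
            continue
        if path.startswith(rule_path):
            is_allow = directive.lower() == "allow"
            longer = len(rule_path) > best_length
            tie_goes_to_allow = len(rule_path) == best_length and is_allow
            if longer or tie_goes_to_allow:
                best_length = len(rule_path)
                allowed = is_allow
    return allowed


rules = [("Disallow", "/images/"), ("Allow", "/images/myphoto.jpg")]
print(is_allowed(rules, "/images/myphoto.jpg"))  # True: the longer Allow path wins
print(is_allowed(rules, "/images/other.jpg"))    # False: only the Disallow matches
print(is_allowed(rules, "/about.html"))          # True: no rule matches
```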

“Sitemap:” -> Tells robots where the website's sitemap file is located. The value should be the full URL of the sitemap. For example:

```
User-agent: *
Sitemap: https://www.mysite.com/sitemap.xml
Disallow: /images/
Allow: /images/myphoto.jpg
```
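Tools can read the Sitemap line directly. In Python 3.8 and later, `RobotFileParser.site_maps()` returns the sitemap URLs found in the file (or None if there are none). A short sketch using the example file above:

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: *
Sitemap: https://www.mysite.com/sitemap.xml
Disallow: /images/
Allow: /images/myphoto.jpg
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Sitemap lines are independent of user-agent groups, so the parser
# collects them wherever they appear in the file.
print(parser.site_maps())  # ['https://www.mysite.com/sitemap.xml']
```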

Robots Meta Tag: Is It Important?

We have discussed the importance and use of the /robots.txt file on our website, but there is another way to tell web robots how to treat our pages: the robots meta tag.

```html
<meta name="ROBOTS" content="NOINDEX, FOLLOW">
```

Like any <meta> tag, it should be placed in the <head> section of the HTML page. It is also best to add it to every page it should apply to, because a robot can reach any page on your site through a deep link instead of arriving through the home page.

The “name” attribute must be “ROBOTS”.

Valid values for the “content” attribute are “INDEX”, “NOINDEX”, “FOLLOW”, and “NOFOLLOW”. Multiple comma-separated values are allowed, although only some combinations make sense. If there is no robots <meta> tag, the default is “INDEX, FOLLOW”, so there is no need to spell that out. Other possible uses of the robots <meta> tag are:

```html
<meta name="ROBOTS" content="INDEX, NOFOLLOW">
```

```html
<meta name="ROBOTS" content="NOINDEX, NOFOLLOW">
```
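To see how a robot might read these tags, here is a minimal Python sketch built on the standard library's `html.parser.HTMLParser`. The class and function names are hypothetical; the name and content values are compared case-insensitively (an assumption, in line with how Google documents its handling), and a page without the tag falls back to the “INDEX, FOLLOW” default described above.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attributes without a value arrive as None; treat them as "".
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() == "robots":
            for token in values.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


def robots_meta(html: str) -> tuple[bool, bool]:
    """Return (may_index, may_follow) for a page; defaults to INDEX, FOLLOW."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" not in parser.directives, "nofollow" not in parser.directives


page = '<html><head><meta name="ROBOTS" content="NOINDEX, FOLLOW"></head></html>'
print(robots_meta(page))  # (False, True)
```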

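The meta tag works page by page and tells robots whether to index a page and follow its links, while the /robots.txt file controls crawling for whole sections of the site from one central place. The two also interact: a robot can only see a page's meta tag if it is allowed to crawl that page, so a NOINDEX tag on a page that /robots.txt blocks will not be read.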

Conclusion

We have seen why the /robots.txt file matters for our website, its syntax, and how we can use it to benefit our site. We have also seen how the robots meta tag works and its limitations.

If we use a /robots.txt file, we need to make sure it is written correctly. An incorrect file can stop web robots from crawling our pages; most critically, we need to make sure we are not blocking the pages that we need search engines to rank.
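A quick local safety check is to run the pages we most need to rank through a parser and list any that the file blocks. This Python sketch uses `urllib.robotparser`; the function name and URLs are hypothetical. Because this parser follows the original standard (no wildcards, first matching rule wins), treat it as a rough check; the search engines' own webmaster tools are the authority on how their robots read the file. The example shows a common mistake: rules match by prefix, so “Disallow: /product” also blocks /products/.

```python
from urllib.robotparser import RobotFileParser


def find_blocked(robots_txt: str, user_agent: str, must_rank: list[str]) -> list[str]:
    """Return the URLs in must_rank that robots_txt blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in must_rank if not parser.can_fetch(user_agent, url)]


# Meant to hide a single /product page, but prefix matching catches more.
robots_txt = """\
User-agent: *
Disallow: /product
"""

important_pages = [
    "https://www.mysite.com/",
    "https://www.mysite.com/products/shoes.html",
]

print(find_blocked(robots_txt, "Googlebot", important_pages))
# ['https://www.mysite.com/products/shoes.html']
```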

—–

Written by Arturo S.